The European Exascale Software Initiative (EESI) is a project funded under the European Commission's 7th Framework Programme (FP7) for research and technological development.

FP7 is a key tool to respond to Europe’s needs in terms of jobs and competitiveness, and to maintain leadership in the global knowledge economy. A large consortium of organisations works together to address the scientific and technical challenges and deliver Exaflop performance in the new generation of computers by 2020.

EESI's mission is to build a vision and roadmap to help Europe develop this new generation of computing systems and achieve efficient Exascale applications.

Exascale computing requires a sustainable, long-term and coordinated effort of several billion euros over 10 years.

EESI, supported by Europe, started in 2010. It has created momentum among HPC end users from academia and industry, computer scientists, and technology and service providers. It continued in 2012 with EESI2, a 34-month project.

150 experts have shared common issues, built a vision and produced recommendations to lead research for efficient Exascale applications.

What is exascale

Exa: from Greek ἕξ, six

International unit prefix = 1000⁶ = (10³)⁶ = 10¹⁸

1 000 000 000 000 000 000 = billion billion = quintillion

High Performance Computing is a strategic instrument to advance scientific excellence, help humanity solve its hardest problems, and sustain industry competitiveness.

By 2020, performance is forecast to be extreme, in the Exascale range.

The invention of computers has made it possible to process data and solve problems at increasing speed and complexity. This speed is measured in floating point operations per second, in short “flops”.

From the 1970s until now (2015), computing capacity has risen from about 100 million flops to petaflops (10¹⁵ flops). In the last decade, computational demand has increased tremendously with new scientific discoveries, growing data volumes, and rising complexity. Meeting this demand requires a new generation of computers made of millions of heterogeneous cores.

What order of magnitude? Exascale refers to a thousandfold increase over current computing capacity: computers will be capable of at least one exaFLOPS, that is, a billion billion (10¹⁸) floating point operations per second.

What does extreme mean? Exascale computing power is believed to be the order of processing power of the human brain at neural level. It is also what we need to count each of the known sextillion stars of the universe in 20 minutes.
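
The orders of magnitude quoted above can be checked with a few lines of Python. This is only an illustrative sketch: it assumes that counting one star costs exactly one operation and that "sextillion" means 10²¹ (short scale); the function name `seconds_to_count` is made up for this example.

```python
# Orders of magnitude quoted in the text, kept as exact integers.
PETA = 10**15    # petaflops: 10^15 floating point operations per second
EXA = 1000**6    # exa prefix: 1000^6 = (10^3)^6 = 10^18


def seconds_to_count(items: int, ops_per_second: int) -> float:
    """Time to count `items`, assuming one operation per item (an assumption)."""
    return items / ops_per_second


# Thousandfold step from petascale to exascale.
speedup = EXA // PETA

# "Sextillion" stars, read on the short scale as 10^21 (an assumption).
stars = 10**21
minutes = seconds_to_count(stars, EXA) / 60

print(f"exa / peta = {speedup}")
print(f"counting 10^21 stars at 1 exaflops takes about {minutes:.1f} minutes")
```

Under these assumptions the count takes 1000 seconds, a little under 17 minutes, which is the same order as the 20 minutes quoted above.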

Exascale computing will be a significant achievement in computer engineering, requiring outstanding technological breakthroughs in both computation and software.

Ambition

The consortium acts as an external and independent representative of the European Exascale community.

EESI's ambition is to support Europe's exascale computing strategy by providing the appropriate guidance to build such capability and keep Europe ahead of the competition.

EESI2 is the second initiative of its type. The objective of this project is to focus on improvements in key software issues, advances on cross-cutting issues, and gap analysis.

EESI2 aims to:

- Contribute to the coordination and the monitoring of the European Exascale Open Source software production, towards an implementation.

- Produce a dynamically updated roadmap of Exascale industrial applications and of Exascale applications for climate, earth sciences, fundamental physics and life science, with a particular emphasis on breakthroughs and gap analysis.

- Produce a roadmap of numerical libraries, software eco-system, scientific software engineering and programmability. Here too, the emergence of breakthroughs will be monitored, for example in linear or non-linear algebra (such as eigenvalues of tensors) or in particle simulation.

- Follow up research programmes in massively parallel stochastic programming, uncertainties, power, performance, data management and resilience, with a particular emphasis on breakthroughs and gap analysis.

- Exchange and dissemination

- Act as a pro-active European voice in the international community and propose an International Exascale Software Initiative

- Prepare periodic syntheses by key issue, with recommendations for the EC, funding agencies and R&D stakeholders

History

The European Exascale Software Initiative was born in 2008 after the first FP7 call. It was accepted as a support action of the 7th Framework Programme for Research.

The first initiative was conducted by Total.

EESI1

From 2008 to 2011, the first initiative of the type federated the European community and built a preliminary cartography, vision and roadmap of HPC technology and software challenges in Europe.

The partners:

EESI2

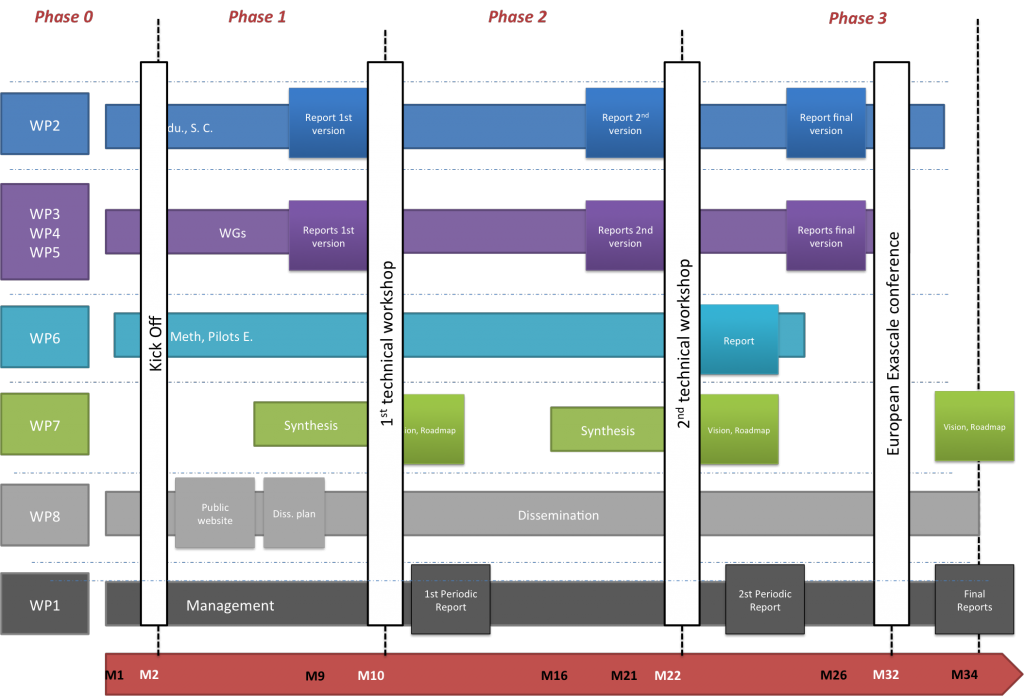

From 2012 to 2015, EESI2, the second funded FP7 project, has gone a step further toward implementation. EESI2 has established a structure to gather the European community, to periodically provide cartography, roadmaps and recommendations, and to identify and follow up the concrete impacts of R&D projects.

Scope of EESI2

The European Exascale Software Initiative (EESI) is leading the path toward the new computing architecture delivering extreme performance.

The community of EESI experts provides insights on key challenges in their specific domains.

They detect disruptive technologies, address cross-cutting issues and develop a gap analysis to support the implementation plan of the Exascale roadmap.

Contribution activities are delivered through a project structure, split into 8 work packages (WP), and sub-tasks or workgroups.

Eventually the project will compile a set of recommendations regarding key priorities in order to formulate a global vision and deliver tools that will be disseminated through a communication plan.

Management

Leader and co-leader: Philippe RICOUX (TOTAL) and Sergi GIRONA (PRACE).

Objectives: set up a management framework to ensure production of deliverables.

This work package covers project management: management bodies, management procedures and tools, quality plan, remote collaboration tools, etc.

- To ensure strategic, financial and contractual management of the consortium, including maintenance of the Consortium Agreement

- To ensure the day-to-day operational management of the project

Sub tasks:

T1.1: Project Global Management

T1.2: Project administrative management.

Education and coordination

Leader and co-leader: Philippe RICOUX (TOTAL), Ulrich Rude (U. of Erlangen).

Objectives:

- Investigate and describe state-of-the-art, trends, and future needs in HPC training and education.

- Establish and maintain a global network of expertise and funding bodies in the area of Exascale computing.

- Investigate and describe the landscape of co-design centers in the area of HPC and specifically Exascale computing. Monitor functioning of international existing centers.

- Act as a proactive European voice and representative into the International Exascale Software Community.

- Integrate within WP tasks, cartography update through continuous use of network.

Sub tasks :

T2.1 State of the art on funding agencies;

T2.2 State of the art on education courses and training needs;

T2.3 State of the art of worldwide Co-design centers;

T2.4 Towards a public collaboration.

Applications

Leader and co-leader: Stéphane REQUENA (PRACE – GENCI) and Norbert Kroll (DLR).

Objectives: extend, refine and produce the application roadmap. WP3 is responsible for the following:

- Investigate key application breakthroughs, quantify their societal, environmental and economic impacts and perform a gap analysis between the current situation and Exascale targets;

- Evaluate scientific and industrial community R&D activities, notably application redesign and development of multiscale/multiphysics frameworks;

- Foster the structuring of scientific communities at European level.

- Integrate cartography update through continuous use of network

Sub tasks :

T3.1: WG Industrial and engineering applications;

T3.2: WG Weather, Climatology and Earth Sciences;

T3.3: WG Fundamental Sciences;

T3.4: WG Life Science & Health;

T3.5: WG Disruptive technologies;

T3.6: Coordination.

Enabling technologies

Leader and co-leader: Rosa Maria BADIA (PRACE – BSC) and Herbert Huber (PRACE-LRZ).

Objectives: provide answers about the technologies and tools that are considered essential for Exascale software. For example, numerical analysis has long addressed solvers for stochastic PDEs or complex equations. Discretization is a key issue in solving equations. The level of parallelization is clearly “physically equation dependent”.

Exascale solvers require at least 1 or 2 more levels of parallelization, even when Exascale systems are used in a weak-scaling regime.

Sub tasks:

T4.1: WG Numerical analysis

T4.2: WG Scientific software engineering, software eco-system and programmability

T4.3 : WG Disruptive technologies

T4.4 : WG Hardware and operating software vendors

T4.5: Coordination.

Cross-cutting issues

Leader and co-leader: Giovanni ERBACCI (PRACE – CINECA) and Franck Cappello (PRACE – INRIA).

Objectives: create and manage studies on cross-cutting issues for Exascale development to push the Exascale software initiative, with the following objectives:

- Define Data management and exploration specific issues roadmap

- Define actions and follow up of projects on uncertainties

- Identify power management impact on the system design and on programmability

- Define concrete actions for coupling Architecture-Algorithm-Application at best

- Define concrete fault tolerance objectives at system and application level

- Survey new hardware and software technologies in order to monitor technologies that could influence the design of Exascale systems

- Integrate within WP tasks, cartography update through continuous use of network

Sub-tasks:

T5.1: WG Data management and exploration;

T5.2: WG Uncertainties;

T5.3: WG Power & Performance;

T5.4: WG Resilience;

T5.5: WG Disruptive technologies;

T5.6: Coordination.

Operational Software maturity level methodology

Leader and co-leader: Bernd MOHR (PRACE – JSC) and François Bodin (U. Rennes).

Objectives: prepare the creation of a European Exascale Software Centre to coordinate research, development, testing, and validation of Exascale software components and modules of the ecosystem. This group will:

- Develop and document a methodology for estimating the level of maturity

- Test the methodology on 3 software stack components

- Propose to Europe a structure adapted to Exascale software

Sub-tasks:

T6.1: Evaluation methodology set up

T6.2: Perform evaluation on 3 components.

Vision, roadmap and recommendations

Leader and co-leader: Philippe RICOUX (TOTAL) and Jean-Yves Berthou (ANR).

Objectives: provide a global vision and perspective of Exascale software development, including the worldwide state of the art and future outlook. Focus on improvements in key software issues (gap analysis), enabling technologies and applications, advances on cross-cutting issues, and international aspects.

The periodic recommendations should be synchronized with EC R&D project agenda.

Dissemination

Leader and co-leader: Peter MICHIELSE (PRACE – SARA) and Sergi Girona (PRACE-BSC).

Objectives: disseminate all inputs of the project to the community. It aims at:

- Elaborating and executing a communication strategy;

- Organizing two internal workshops;

- Organizing a European Exascale conference.

Sub-tasks:

T8.1: Organization of technical workshops;

T8.2: Organization of European Exascale conference;

T8.3 Information Dissemination;

T8.4 EESI in Europe and world-wide.

The EESI project consortium has been designed to represent a cross-section of European and international key actors in the field of HPC. The partnership has a deep and broad expertise in all the technological and strategic aspects related to HPC.

The consortium, coordinated by TOTAL, is composed of:

- 2 contractual partners: TOTAL & PRACE

- 31 organizations which act as chairs and vice chairs of Work Packages and tasks

- Around 100 experts who contribute through the project tasks and working groups

Contractual partners

TOTAL

Profile: the world’s 5th-ranked publicly traded integrated international oil company. Total has operations in 130+ countries, 100,000 employees, and €189.5 billion in 2013 sales.

Research and development (R&D) is an integral part of the company strategy, enabling it to match its services to energy and environmental challenges, in particular in the domain of Earth science (seismic, reservoir modelling, …).

Contribution to HPC: strong application demonstration partner to test and optimize robust and scalable numerical software.

EESI contacts:

Philippe RICOUX

PRACE

Profile: the Partnership for Advanced Computing in Europe is an international non-profit association with its seat in Brussels. It has 21 member countries representing European governments and organizations.

PRACE enables world-class scientific discovery and engineering for academia and industry by providing access to high performance computing, data management, storage resources and services. The rigorous access process targets high impact projects to enhance European competitiveness for the benefit of society.

Contribution to HPC: created a pan-European Research Infrastructure (RI) that provides leading High Performance Computing (HPC) resources. PRACE has an extensive pan-European education and training effort devoted to helping users and preparing the next generation of scientists and engineers.

EESI contacts:

Sergi GIRONA

Computing centres

DKRZ

Profile: German Climate Computing Centre provides the tools and associated services which are needed to investigate the processes in the climate system. Operates a supercomputer center to enable climate simulation

Contribution to HPC: Outstanding research infrastructure for model-based simulations of global climate change. Provides the technical infrastructure needed for processing and analysis of climate data

EESI contacts:

Thomas LUDWIG

GENCI

Profile: agency in charge of defining the French strategy in HPC for civil research. Its mission encompasses multiple roles from promotion of HPC in research, financing and setting-up supercomputers in French centers, to optimising utilisation by opening access of HPC equipment to all interested parties.

Contribution to HPC: since 2012 it has operated the PRACE Tier-0 supercomputer “CURIE”, a system with a peak performance of 2.1 Petaflops and 320 Terabytes of main memory

EESI contacts:

Stéphane REQUENA

Jülich Supercomputing Centre

Profile: institute at Forschungszentrum Jülich with a staff of 90 people and 35 third-party members. Explores architecture opportunities based on FPGA, Cell and GPU systems. It provides support and higher education to specific scientific communities through simulation labs and cross-disciplinary groups.

Contribution to HPC: provides and operates supercomputer resources of the petaflop performance class, HPC tools, methods and know-how. Servers include JuRoPA, 200 Teraflop/s Intel-based and JUGENE 72-rack IBM Blue Gene/P petaflop system. JSC focuses its exascale activities on applications and algorithms

EESI contacts:

Bernd MOHR

Godehard SUTMANN

Leibniz Supercomputing Centre (Leibniz-Rechenzentrum, LRZ)

Profile: a competence center for large scale data archiving and backup. Active player in the area of HPC for over 20 years. It offers services to universities in Germany and to publicly funded research institutions.

Contribution to HPC: since 2012 it has operated the PRACE Tier-0 supercomputer “SuperMUC”, a system with a peak performance of 3 Petaflop/s and more than 320 Terabytes of main memory

EESI contacts:

Herbert HUBER

SARA

Profile: National Supercomputing and e-Science Support Center in the Netherlands to support research by developing and providing advanced ICT infrastructure, services and expertise.

Contribution to HPC: hosts various large infrastructure services including 20 to 65 TF/s CPUs, >5000 core clusters, 5PB storage, 20PB tape capacity, and the BIG Grid (tier1 site for CERN)

EESI contacts:

Peter MICHIELSE

Science & Technology Facilities Council

Profile: independent, non-departmental public body of the Department for Business, Innovation and Skills (DBIS). Provides grants, access to facilities and expertise particularly in fundamental sciences

Contribution to HPC: access to world-class facilities, including neutron sources, synchrotron sources, lasers and high-performance computing facilities

EESI contacts:

Mike ASHWORTH

Iain DUFF

The Barcelona Supercomputing Center (BSC)

Profile: national computing facility of Spain with a mission to research, develop and manage information technologies; it strives for first-class research in supercomputing and in HPC-demanding applications such as life and earth sciences

Contribution to HPC: hosts MareNostrum supercomputer system (94 Teraflop/s on PowerPC, 20 TeraByte memory, 280 TeraByte disk space) and coordinates the Spanish Supercomputing Network (RES) representing 136 TeraFlop/s capacity from 7 supercomputer centres in Spain, offering 130 million CPU hours per year to scientists

EESI contacts:

Sergi GIRONA

Rosa BADIA

Jesus LABARTA

Ramon GONI

Research partners

ANR

Profile: French research funding organisation since 2005

Contribution to HPC: identifies the priority areas and fosters private-public collaboration to enhance competitiveness

EESI contacts:

Mark ASCH

Centre National de la Recherche Scientifique (CNRS)

Profile: government-funded research organisation and the largest fundamental research organisation in Europe. CNRS carries out research in all fields of knowledge, through ten scientific institutes

Contribution to HPC: CNRS operates the IDRIS supercomputing centre, giving expertise in HPC service provisioning to all research areas. CNRS supports EESI2 in the area of earth sciences, with a focus on big data from seismic processing, numerical modelling and computer sciences.

EESI contacts:

Sylvie JOUSSAUME

Commissariat à l'énergie atomique et aux énergies alternatives (CEA)

Profile: French government-funded technological research organization active in low-carbon energies, defense and security, information technologies and health technologies

Contribution to HPC: CEA is an intensive user of Petascale computing and a major player in HPC in Europe. It has strong expertise in the operation of large HPC centres

EESI contacts:

Romain TEYSSIER

DLR

Profile: Germany's national research center for aeronautics and space, in charge of the space programme

Contribution to HPC: computational aeroacoustics simulation codes

EESI contacts:

Norbert KROLL

INRIA

Profile: French public institute dedicated to research in information and communication science and technology (ICST). 8 research centres, 4300 employees, 250 million euros budget. Strategy of scientific excellence with technology transfer

Contribution to HPC: strongly involved in FP7 projects, 85 of which are in ICT domains

EESI contacts:

Franck CAPPELLO

Numerical Algorithms Group (NAG)

Profile: UK not-for-profit numerical software development company

Contribution to HPC: collaboration with world-leading entities to support the progress of academia and industry in developing and using supercomputing applications

EESI contacts:

Andrew JONES

TERATEC

Profile: French initiative with the mission to create an HPC and simulation ecosystem. Non-profit organisation grouping industry users, technology providers and research centres.

Contribution to HPC: gathering companies around public and private labs and a training institute close to a very large supercomputing centre (CEA)

EESI contacts:

Hervé MOUREN

Academic partners

University of Erlangen

Profile: one of the largest universities in Germany. Scope from humanities to law, economics, sciences, medicine and engineering.

Contribution to HPC: anchored in a close network of interdisciplinary cooperations

EESI contacts:

Ulrich RUDE

German Research School for Simulation Sciences

Profile: school committed to research and education in the applications and methods of HPC-based computer simulation in science and engineering. It provides a Master’s and a doctoral program for the next generation of computational scientists and engineers.

Contribution to HPC: its Laboratory for Parallel Programming specialises in tools that support simulation scientists in exploiting parallelism at massive scales. Example: Scalasca, a scalable performance-analysis tool

EESI contacts:

Felix WOLF

Moscow State University

EESI contacts:

Vladimir VOEVODIN

The School of Mathematics

Profile: expanding department at the University of Edinburgh with 50 people, 13 postdocs and 65 students

Contribution to HPC: Operational research, applied mathematics and mathematical physics interest

EESI contacts:

Mark PARSONS

Mark BULL

Andreas GROTHEY

Technische Universität Dresden

EESI contacts:

Matthias MÜLLER

The University of Manchester

EESI contacts:

Lee MARGETTS

Universita del Salento

EESI contacts:

Giovanni ALOISIO

University of Bristol

Profile: UK 4th ranked department in Computer Science. Microelectronics research group working on Energy Aware Computing (EACO)

Contribution to HPC: worked on energy efficiency subtask of EESI1, helped run the Energy-Aware HPC conference, and is driving the new Energy Efficient HPC consortium (EEHPC.com). Leading work on cross-cutting issue of power efficiency in EESI2.

EESI contacts:

Simon McINTOSH-SMITH

University of Nottingham

Profile: School of Pharmacy at the University of Nottingham, rated No. 1 in the Research Assessment Exercise for Pharmacy.

Contribution to HPC: Research in the design and use of drugs and medicines that requires significant HPC activity

EESI contacts:

Charlie LAUGHTON

Industry partners

Centre Européen de Recherche et de Formation Avancée en Calcul Scientifique (CERFACS)

Profile: research organization that develops advanced methods for the numerical simulation and the algorithmic solution of large scientific and technological problems that require access to supercomputers.

Contribution to HPC: parallel algorithms, code coupling, aerodynamics, combustion, climate and environment, data assimilation, and electromagnetism

EESI contacts:

Serge GRATON

CINECA

Profile: private body created in 1969. A consortium of 37 Italian universities and more, which operates several leading HPC datacentres. Member of key projects and DEISA. Coordinates HPC Europa2 and HPCWorld

Contribution to HPC: operates a supercomputing integrated infrastructure with 15000+ processors. 168 nodes, each with 32 cores and 128 GB of RAM, 1,8PTbytes storage. Peak power 100 Tflops. IBM Blue Gene P 1024 CPU quad core, 14 Tflops. It also operates the ENI supercomputer, with 10 000 processors. Experience in HPC code parallelisation and optimisation

EESI contacts:

Giovanni ERBACCI

CSC

Profile: non-profit limited company owned by the Finnish state; the largest national IT centre for science in Northern Europe, with 220 people. Operates the Finnish University and Research Network (Funet), with the largest collection of scientific software and databases.

Contribution to HPC: coordinates the EUDAT project to provide a sustainable pan-European infrastructure for improved access to scientific data. Partner in key e-infrastructure development projects (e.g. PRACE)

EESI contacts:

Per OSTER

EDF

EESI contacts:

Ange CARUSO

Alberto PASANISI

INTEL

EESI contacts:

Marie-Christine SAWLEY

Néovia Innovation

Profile: Consulting company in research and innovation.

Contribution to HPC: Numerical simulation, algorithm.

EESI contacts:

Thierry BIDOT

JCA Consultance

Profile: Consulting company in scientific computing

Contribution to HPC: Climate simulation

EESI contacts:

Jean-Claude ANDRE